Pulse of Information

Your source for the latest insights and updates.

When Cars Drive Themselves, Who’s in the Driver’s Seat?

Explore the future of self-driving cars and discover who's really in control. Buckle up for a wild ride into autonomy and ethics!

The Future of Autonomy: Who Takes Charge in Self-Driving Cars?

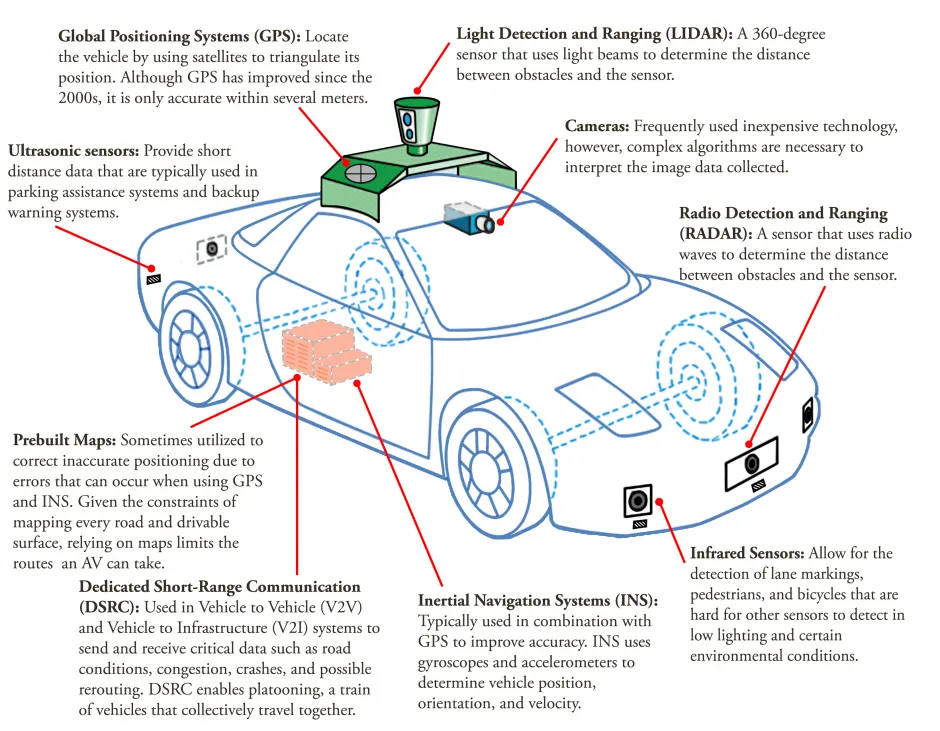

The future of autonomy in self-driving cars is evolving rapidly, prompting debate over who will take charge of these sophisticated vehicles. As artificial intelligence (AI) becomes more deeply integrated into our transportation systems, the roles of manufacturers, software developers, and regulatory bodies will become increasingly intertwined. Key players in the automotive industry are competing to establish standards and guidelines intended to make self-driving technology both safe and efficient. This race for dominance could produce a variety of autonomous driving models, in which different stakeholders hold varying degrees of control over the vehicle's operation.

Moreover, the question of responsibility in the event of an accident remains a pressing concern. Looking ahead, liability and accountability for self-driving vehicles will need careful consideration: should the car manufacturer, the software developer, or the human occupant be held responsible? Legal frameworks are likely to evolve to address these challenges, and public opinion will play a significant role in shaping the future of autonomy. Ultimately, collaboration among technology developers, lawmakers, and society at large will determine who truly takes charge of self-driving cars.

Navigating Liability: Who’s Responsible When Cars Drive Themselves?

The advent of autonomous vehicles is transforming the automotive industry and raising significant questions about accountability when accidents happen. When cars drive themselves, determining who is at fault becomes far more complex. Traditional notions of liability place responsibility on the human driver, but advanced technologies such as machine learning and artificial intelligence blur those lines. When an accident occurs, is the driver, the manufacturer, or even the software developer liable? Understanding this evolving landscape is crucial for consumers, manufacturers, and legal professionals alike.

As we navigate the future of transportation, it's essential to consider how liability rules and insurance policies should be adapted for self-driving cars. Experts suggest that a comprehensive legal framework will be needed to address these new challenges. For instance, it may be necessary to create a no-fault insurance system specifically for autonomous vehicles, in which injured parties are compensated without first having to prove who was at fault, and responsibility is then assessed based on the technology's performance rather than on the actions of a human driver. Ultimately, as autonomous driving technology matures, consumers will need to stay informed about their rights and responsibilities in this new era of transportation.

Self-Driving Cars and the Ethical Dilemma: Who’s in Control?

The arrival of self-driving cars has ushered in a new era of transportation, but it has also sparked a significant ethical dilemma about control and responsibility. When a self-driving vehicle faces an unavoidable accident, who is to blame: the manufacturer, the software developers, or the owner of the vehicle? Because these autonomous systems make decisions in real time, the difficulty of programming ethical guidelines into them becomes evident. As experts have pointed out, programmers must consider scenarios such as how to weigh the safety of passengers against that of pedestrians, a moral calculus that is not easily defined.

Furthermore, the shift toward autonomous vehicles calls for a reevaluation of existing laws and regulations. Policymakers face the challenge of developing frameworks that address the safety and liability of self-driving cars. There are also concerns that bias in decision-making algorithms could expose certain groups to disproportionate risk. As the debate continues, society must grapple with the question of who is in control: the algorithms, the manufacturers, or ultimately the consumers? The future of transportation hinges not only on technological advancement but also on how we navigate these ethical dilemmas.